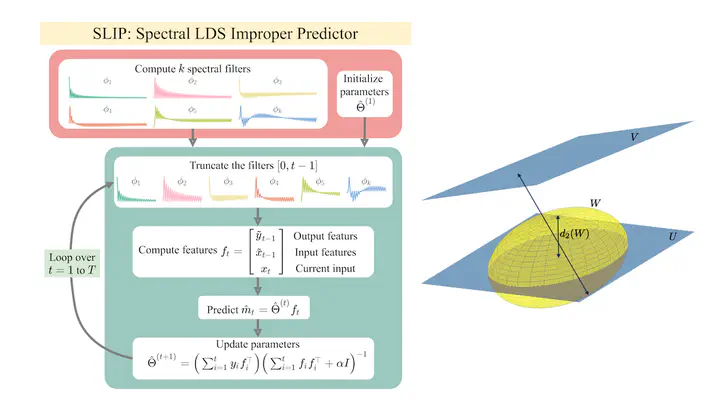

SLIP: Learning to Predict in Unknown Dynamical Systems with Long-Term Memory

Abstract

We present an efficient and practical (polynomial time) algorithm for online prediction in unknown and partially observed linear dynamical systems (LDS) under stochastic noise. When the system parameters are known, the optimal linear predictor is the Kalman filter. However, the performance of existing predictive models is poor in important classes of LDS that are only marginally stable and exhibit long-term forecast memory. We tackle this problem through bounding the generalized Kolmogorov width of the Kalman filter model by spectral methods and conducting tight convex relaxation. We provide a finite-sample analysis, showing that our algorithm competes with Kalman filter in hindsight with only logarithmic regret. Our regret analysis relies on Mendelson’s small-ball method, providing sharp error bounds without concentration, boundedness, or exponential forgetting assumptions. We also give experimental results demonstrating that our algorithm outperforms state-of-the-art methods. Our theoretical and experimental results shed light on the conditions required for efficient probably approximately correct (PAC) learning of the Kalman filter from partially observed data.